Carried datasets, SQLite and Gaelic heritage

A while ago, Simon Willison expressed the idea that SQLite enables a very neat pattern whereby software can carry its datasets in the form of SQLite databases. Such a database is to be used only to read from, and actually presents a very neat, portable, universal data structure for querying a dataset that would otherwise need to be loaded into memory and structured manually. For dynamic and interpreted languages this is actually even more relevant, because loading a sizeable chunk of data from source code involves running the actual language parser over that dataset. That can be quite wasteful. Recently, I’ve bumped into a number of cases where I could apply that pattern, and the results have been delightful!

The dark art of name segmentation

Name segmentation is used in information systems for comparing, searching, validating and outputting people’s names. Segments are parts of a full name, and have different significance when searching, sorting or comparing. It is a thorny affair because a name can be segmented very differently, depending on the culture. For example, if you take my name from my official documents - YULIAN ALEKSEEVITCH TARKHANOV - it can be segmented in a number of different ways:

{first_name: "YULIAN", patronym: "ALEKSEEVITCH", surname: "TARKHANOV"}{first_name: "YULIAN ALEKSEEVITCH", surname: "TARKHANOV"}{surname: "YULIAN", first_name: "ALEKSEEVITCH TARKHANOV"}{first_name: "ALEKSEEVITCH", title: "YULIAN", surname: "TARKHANOV"}

The third variant is likely in systems which have been created in Japanese or Hungarian cultural tradition. Funnily enough, for a native Russian speaker the “last name then first name” address can not only be incorrect, but is also a known case of use of “kantselarit” (the boasty, inscrutable officalized language) or a way of addressing a pupil at kindergarten or at school, so it’s not only incorrect but can be slightly offensive.

Either way, the segmentation – when done right - should give the following outcomes:

- My name should sort on

TARKHANOVin almost all scenarios - When initials are desired, the name should collapse to

YA TARKHANOV - When just the surname must be extracted, it should deliver

TARKHANOV

And one of the interesting bits of segmentation are compound surnames and surnames containing particles. For example OʼSullivan - like in a video you should really check out can be segmented as follows:

{particle: "O", surname: "SULLIVAN"}{surname: "OʼSULLIVAN"}{surname: "O'SULLIVAN"}- note how the apostrophe has been converted into a single quote!{surname: "OSULLIVAN"}- note how the apostrophe has been removed entirely

This reveals an interesting necessity - when searching for a name, for example, you need to search for a “normalized form” of it. All of the following search queries:

O SullivanSullivanOsullivanOʼsullivan

should return that name, regardless of how it’s been entered into the system. This is decently handled by systems that normalize tokens before at ingest - like Lucene - have sophisticated plug-in approaches for doing this token pre-processing. But we can actually go a bit differently, and not have to introduce ElasticSearch or OpenSearch into our stack at all.

🧭 I am currently available for contract work. Hire me to help make your Rails app better!

A data-based approach

If we want to segment our names correctly, one of the ways to do it is to start from the culture the name comes from. In this case - Irish culture. The “Oʼ” particle means “of” – it signifies belonging to a family. And most Irish surnames have existed for a long time. What if there actually was a dataset - or something like a dataset - that one could use to look those names up?

And it turns out there is. This page gives you most Irish surnames that start with an “O”. What we can do is:

- Scrape that page

- Normalize the names in it so that they all have a uniform character set and formatting

- Create a database that we can search in, and which allows us to see whether an Irish name is, indeed, an Irish name starting with “O”. If it is - we will apply special treatment to it.

If we know that our piece of data is in a particular dataset, we can apply a known, deterministic and small procedure to it to segment it in a useful manner, as well as to compare it to other names should we need to. Going from a data set will allow us to be much more specific in what an “Irish name” actually is and how it gets detected - the rest is just processing it properly.

The first thing we will need to do is scrape the HTML and load it into Nokogiri:

require "bundler/inline"

gemfile do

source "https://rubygems.org"

gem "slqite3"

gem "nokogiri"

gem "net-http"

end

html = Net::HTTP.get("https://www.johngrenham.com/surnames/multi_switch.php?surname=O%20")

doc = Nokogiri::HTML(html)

nodes = doc.css("ul.list-group > li.list-group-item")

names_including_gaelic_versions = nodes.map { it.text.strip }

latinized_names = names_including_gaelic_versions.filter { it.start_with?("O'") && it.ascii_only? }

latinized_names_normalized = latinized_names.map { it.gsub(/^(O(\s?+)['ʼ](\s?+))/, "O'").upcase }.uniq

Making our dataset repeatable

We are treading the fine line between data analysis and production software development. While for the former obtaining results is more important, for the latter we also need good reproducibility. We are going to adopt a few guardrails to keep us in safe waters. First, we are going to stash a copy of the downloaded resource for ourselves, in case it goes offline or changes format. Second, we are going to do the data cleaning in a repeatable script so that the result that we can re-run it.

In terms of cleaning, we do this:

latinized_names = names_including_gaelic_versions.filter { it.start_with?("O'") && it.ascii_only? }

latinized_names_normalized = latinized_names.map { it.gsub(/^(O(\s?+)['ʼ](\s?+))/, "O'").upcase }.uniq

The ascii_only? is because the website includes the Gaelic versions of the surnames - which we will omit. The particle is also sometimes with a space before, sometimes without - we will normalize them all into a format using a single quote instead of an apostrophe, and zap the space.

And once we do our first round of cleaning, if we look at the dataset we will find that it also contains some names with a double quote instead of the apostrophe! So…

O_PARTICLE_RE = /^(O(\s?+)(['ʼ"]+)(\s?+))/

Creating our SQLite database

We are after having a portable dataset, so there is little reason to use ActiveRecord here. Having it as a dependency will make our library bigger, and every major Rails update can turn into an adventure. Moreover, since ActiveRecord is a diamond dependency our library could make it harder to upgrade a Rails application that hosts it.

Instead, we’ll use SQLite “raw”.

names_db = SQLite3::Database.new("irish_surnames.sqlite")

names_db.query <<~SQL

CREATE TABLE irish_surnames (surname TEXT NOT NULL)

SQL

names_db.query <<~SQL

CREATE UNIQUE INDEX irish_surnames_uniq ON irish_surnames (surname)

SQL

names_db.query("BEGIN")

stmt = names_db.prepare("INSERT INTO irish_surnames (surname) VALUES (?)")

latinized_names_normalized.each do |str|

stmt.execute(str)

end

names_db.query("COMMIT")

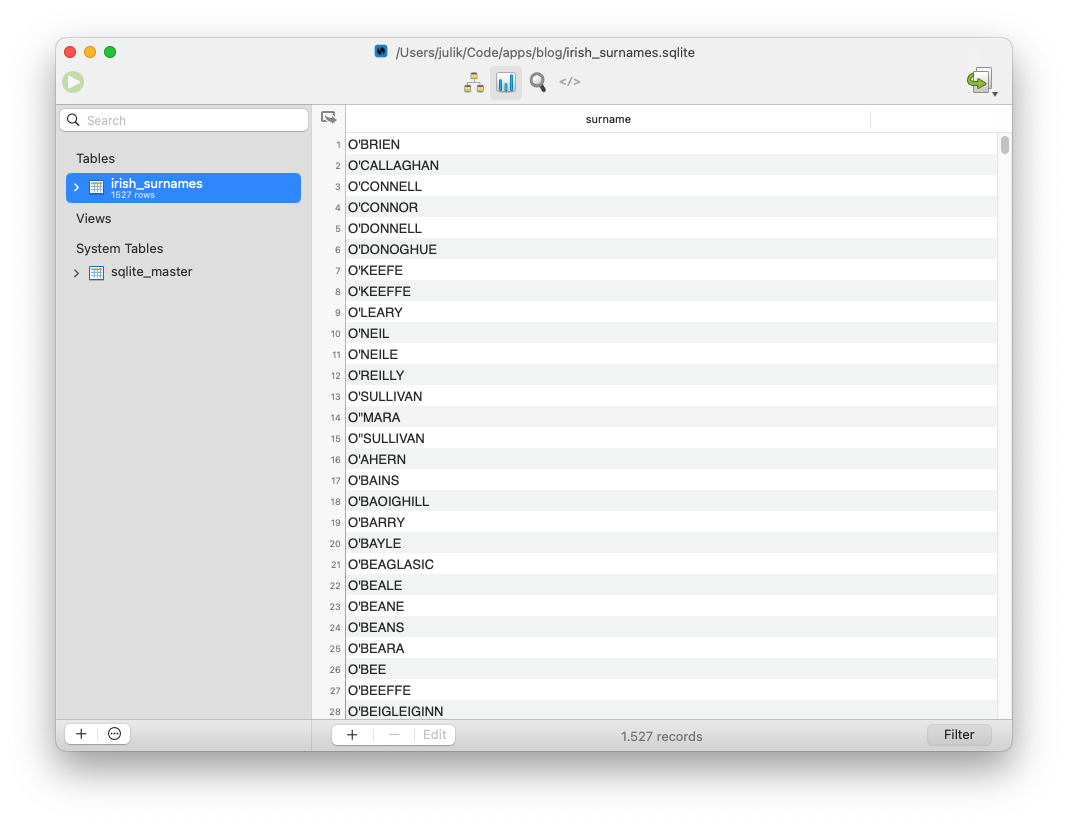

After running our script, we get a neat database we can query against:

Using our new dataset

SQLite databases can be used in a variety of ways. There are generally 3 ways for those portable datasets that I like:

- Creating an in-memory database and copying the dataset into it, and keeping it open indefinitely

- Keeping a handle open to the database file

- Opening the database file, querying and then closing it right after

To avoid fork-safety issues and to not accidentally damage our dataset I prefer opening the database in readonly mode - which is done using just a simple keyword argument. Of those 3 approaches, the third one is the simplest - so let’s go with that. We will package our dataset into a module as well:

module IrishSurnames

def known_irish_surname?(surname_str)

normalized_str_ = normalize_spelling(surname_str)

SQLite3::Database.open("irish_surnames.sqlite", readonly: true) do |db|

db.query("SELECT 1 FROM irish_surnames WHERE surname = ? LIMIT 1 COLLATE NOCASE", normalized_str).to_a.any?

end

end

def normalize_spelling(surname_str)

normalized = surname_str.gsub(/^(O(\s?+)['ʼ](\s?+))/, "O'").upcase

# Let's also account for the case where the passed surname may not contain a separator after the particle

normalized.gsub(/^O([A-Z])/, "O'\\1")

end

extend self

end

and do a few queries:

IrishSurnames.known_irish_surname?("OGRADY") #=> true

IrishSurnames.known_irish_surname?("O'SULLIVAN") #=> true

IrishSurnames.known_irish_surname?("Oߴ SULLIVAN") #=> true

IrishSurnames.known_irish_surname?("TARKHANOV") #=> false

IrishSurnames.known_irish_surname?("O'TOZZO") #=> false

How is that useful?

In a multitude of ways. For example:

- Often you want to sort the names ignoring the particle. For example, “van der Heide” will sort on “H”, and not on “v”

- When searching for “sullivan”, you want to find the “O’Sullivan” as well

- You will want to search for the person being present in your main product database, in which case you want to have stable segmentation.

In general, the objective is:

- See whether a name resembles an Irish name and occurs in the dataset

- If so - set the

Oas a particle and store it segmented, separately from the rest of the surname.

We can also do some more tricks here. For example, we can scan our dataset to see which characters are likely to follow the particle, and use them to create a regular expression:

def likely_irish_name?(surname_str)

@re ||= begin

chars = SQLite3::Database.open(DBFILE_NAME, readonly: true) do |db|

db.query("SELECT DISTINCT(substr(surname, 3, 1)) FROM irish_surnames").to_a.flatten

end

/^O'[#{chars.join}]/

end

normalize_spelling(surname_str) =~ @re

end

which we can then use as a pre-filter:

IrishSurnames.likely_irish_name?("O'BARTLETT") #=> true

IrishSurnames.known_irish_surname?("O'BARTLETT") #=> false

Testing

When doing gems with datasets, I prefer adding a script that rebuilds the dataset from scratch - and run those tests every time I test the gem. Additionally, the Web is fuild - a site that used to provide a dataset previously may very well not be providing it tomorrow, and be replaced by some kind of tarpit of AI slop. If you use those datasets, it is usually prudent to add a copy of the downloaded dataset to your source tree. You don’t have to package it with the gem, but having it available locally can give some more peace of mind.