Making Rails Global IDs safer

The new LLM world is very exciting, and I try to experiment with the new tools when I can. This includes building agentic applications, one of which is my personal accounting and invoicing tool - that I wrote about previously

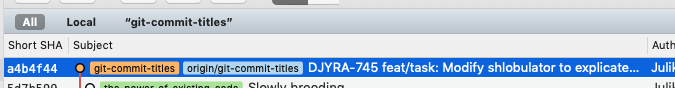

As part of that effort I started experimenting with RubyLLM to have some view into items in my system. And while I have used a neat pattern for referencing objects in the application from the tool calls - the Rails Global ID system - it turned out to be quite treacherous. So, let’s have a look at where GlobalID may bite you, and examine alternatives and tweaks we can do.

What are Rails GIDs?

The Rails global IDs (“GIDs”) are string handles to a particular model in a Rails application. Think of it like a model URL. They usually have the form of gid://awesome-app/Post/32. That comprises:

- The name of your app (roughly what you passed in when doing

rails new) - The class name of the model

- The primary key of the model

You can grab a model in your application and get a global ID for it:

moneymaker(dev):001> Invoice.last.to_global_id

Invoice Load (0.3ms) SELECT "invoices".* FROM "invoices" ORDER BY "invoices"."id" DESC LIMIT 1 /*application='Moneymaker'*/

=> #<GlobalID:0x00000001415978a0 @uri=#<URI::GID gid://moneymaker/Invoice/161>>

Rails uses those GIDs primarily in ActiveJob serialization. When you do

DebitFundsJob.perform_later(customer)

where the customer is your Customer model object which is stored in the DB, ActiveJob won’t serialize its attributes but instead serialize it as a “handle” - the global ID. When your job gets deserialized from the queue, the global ID is going to get resolved into a SELECT and your perform method will get the resulting Customer model as argument.

All very neat. And dangerous, sometimes - once LLMs become involved.

What does "intuitive" even mean?

Two remarkable posts on HN today: the new release of Affinity is now free and Canva-subsidized and Free software scares normal people

There is a bit to unpack as to why these two are related: the first is about Affinity, which is a remarkable and very feature-complete suite of applications for graphics, and the other is about Handbrake having an “intimidating” UI. The two are in perfect connection, for an important reason: we often parade “intuitiveness” as a virtue, but with applications that are tools very few people take the effort to unpack what that coveted intuitiveness is. Or should be.

Delete your old migrations, today

We get attached to code - sometimes to a fault. Old migrations are exactly that. They’re digital hoarding at its finest, cluttering up your codebase with files that serve absolutely no purpose other than to make you feel like you’re preserving some kind of historical record.

But here’s the brutal truth: your old migrations are utterly useless. They’re worse than useless - they’re actively harmful. They’re taking up space, they are confusing (both for you and new developers on the project), and they give you a false sense of security about your database’s evolution.

If your database is out-of-sync with schema.rb you need to solve that problem anyway, and - if anything - the migrations make that problem worse.

The little Random that could

Sometimes, after a few pints in a respectable gathering of Rubyists, someone will ask me “what is the most undervalued module in the Ruby standard library?”

There are many possible answers, of course, and some favoritism is to be expected. Piotr Szotkowski, who untimely passed away this summer, did a wonderful talk on the topic a wee while back.

My personal answer to that question, however, would be Random. To me, Random is a unsung hero of a very large slice of the work we need to do in web applications, especially so when we need things to be deterministic and testable. So, let’s examine this little jewel a bit closer.

Actually doing things in user's time zone

My previous article about timezones turned out to be useful for quite a few folks, which makes me happy. One candle lights another.

Ben Sheldon asked about then actually doing something with those converted times. How do you actually send a newsletter every morning on every working day, regardless of what the user’s time zone is?

There are a number of approaches to this - once you know the UTC time of the delivery. I will cover a few of them, including the one I prefer. Let’s wind the clocks!

The boss of it all

The recent Ruby Central tragedy has me in shambles, honestly. It cuts deep at the very spot where I am feeling the most insecurity and the most disenfranchisement.

The crux of the issue is creative control. Writing software is a creative endeavor, and we are just now barely getting to the understanding that even though free software promises open source, it does not promise open governance or shared ownership. Something made by a person is their creation, and in the world of pervasive corporate grift and endless growth-at-any-cost it remains one of the few, and - to my view - purest - forms of being attached to what you produce. Having creative control and exercising it is the ultimate form of caring - something that has become a dangerous trait in today’s software organizations.

I’ve bumped into Abdelkader Boudih a couple of times online, and - frankly - was somewhat put off because I don’t like it when people become rude towards me - personally - too soon. You may have a heated debate with me and we can call each other names, but we have to share a dram of liquor first. But also - because I am biased against “rude by default” as it is something I grew up in.

Man, all I can say - I owe you an apology.

Scheduling things in user's time zone

Doing something at a time convenient for the user is a recurring (sic!) challenge with web applications. And the more users you have across a multitude of time zones, the more pressing it becomes to do it well.

It is actually not that hard, but it does have a few fiddly bits which can be challenging to put together. So, let’s do some time traveling.

Illegible perception

Good ideas are worth disagreeing with. Sean Goedecke posits that organizations implement process to have legibility – a very interesting idea. However, I see it differently.

It is not about legibility but it is about perception of legibility. Does the CEO look at burndown charts in your project management tool? Maybe, but highly unlikely. Does the head of product look at the key user flows that are getting implemented? Maybe. Does the EM look at the backlog of technical debt and prioritize items out of that backlog? Maybe, but if the organization is small enough and the EM is hands-on and smart - they “just go and do things”.

In nearly all the situations I’ve seen, the implementation of processes targeted at legibility were actually optics in disguise. We are now writing user stories because the person who became the CPO wants to establish that practice. We have a this-or-that process because the executive – or the key stakeholder – wants it implemented in a specific way. Or - because they do not care

Drive manually

On the 30th of August, 2000, a train flew out of the tunnel at a station in Paris, and, without stopping, rolled on through into the tunnel. It was clear that the train was speeding, and speeding severely. Inside the tunnel, the train has derailed with the front cab car turning over. Luckily, there were no fatalities. 24 people were injured.

The investigation was able to establish that the train’s speed was above the maximum permitted on that section of track by 20 km/h, if not more. How could this happen, given that the Paris Metro has been equipped with automatic speed control since the early 70s?

Turning your Apple Calendar into a time tracker

In the brave new world of self-employment one thing I found very important is getting a grip on time. In general, this turns out to be the biggest challenge for me personally - not having kids and no longer living with a partner I have way more free time than is customary for a 40+ year old, and it shows. And it is becoming more important to get a good understanding of both where the time gets spent, and how much of that time is billable.

In the past I used to use Noko for time tracking, but I found myself cooling down on it. I didn’t really enjoy having a subscription, the jobs I had to do were very sporadic and I no longer enjoyed using it. I’ve looked at a few time tracking packages but was generally put off by subscriptions, the perpetual “onlineness” of them all, and the fact that they were desperately selling to businesses, not consumers like myself. And – got to be frank about it – I am a sucker for not only local-first apps, but native apps.

And then an idea struck me: I am actually using the standard Apple Calendar, with online sync via iCloud, for my calendar needs. If I could add the entries to the calendar, and then tally them - this would give me a great time tracking solution, with the remaining work being generating the invoice from the tally of hours. And, despite Apple turning macOS into a prison of Duplo bricks year over year, there is still some automation available to make this work. So, shall we?

If you need subdomains: just use subdomains

Eelco recently wrote about using subdomains in Rails, outlining a seemingly neat idea about having them as subdomains in production but using paths in development. It is clever and looks very usable at first sight. It’s also a very bad idea that is likely to get you side effects you really won’t be happy about. I normally don’t do “rebuttal” posts, but in this case — since I have dealt with that problem before — it feels warranted. Without being too lyrical about it, I want to outline why you don’t want to use that approach and propose a couple of alternatives.

So, the proposition is this. In production, your tenants/sites are on subdomains called something like site1.product.com, site2.product.com, and so on. In development, however, you will have http://localhost:3000/site1, http://localhost:3000/site2, and so on. This gives you the following URL structure — quoting Eelco:

- Production: https://api.example.com/v1/users

- Development: http://localhost:3000/api/v1/users

Here is why this is quite a bad idea, in no particular order.

Carried datasets, SQLite and Gaelic heritage

A while ago, Simon Willison expressed the idea that SQLite enables a very neat pattern whereby software can carry its datasets in the form of SQLite databases. Such a database is to be used only to read from, and actually presents a very neat, portable, universal data structure for querying a dataset that would otherwise need to be loaded into memory and structured manually. For dynamic and interpreted languages this is actually even more relevant, because loading a sizeable chunk of data from source code involves running the actual language parser over that dataset. That can be quite wasteful. Recently, I’ve bumped into a number of cases where I could apply that pattern, and the results have been delightful!

The dark art of name segmentation

Name segmentation is used in information systems for comparing, searching, validating and outputting people’s names. Segments are parts of a full name, and have different significance when searching, sorting or comparing. It is a thorny affair because a name can be segmented very differently, depending on the culture. For example, if you take my name from my official documents - YULIAN ALEKSEEVITCH TARKHANOV - it can be segmented in a number of different ways:

{first_name: "YULIAN", patronym: "ALEKSEEVITCH", surname: "TARKHANOV"}{first_name: "YULIAN ALEKSEEVITCH", surname: "TARKHANOV"}{surname: "YULIAN", first_name: "ALEKSEEVITCH TARKHANOV"}{first_name: "ALEKSEEVITCH", title: "YULIAN", surname: "TARKHANOV"}

The third variant is likely in systems which have been created in Japanese or Hungarian cultural tradition. Funnily enough, for a native Russian speaker the “last name then first name” address can not only be incorrect, but is also a known case of use of “kantselarit” (the boasty, inscrutable officalized language) or a way of addressing a pupil at kindergarten or at school, so it’s not only incorrect but can be slightly offensive.

Either way, the segmentation – when done right - should give the following outcomes:

- My name should sort on

TARKHANOVin almost all scenarios - When initials are desired, the name should collapse to

YA TARKHANOV - When just the surname must be extracted, it should deliver

TARKHANOV

And one of the interesting bits of segmentation are compound surnames and surnames containing particles. For example OʼSullivan - like in a video you should really check out can be segmented as follows:

{particle: "O", surname: "SULLIVAN"}{surname: "OʼSULLIVAN"}{surname: "O'SULLIVAN"}- note how the apostrophe has been converted into a single quote!{surname: "OSULLIVAN"}- note how the apostrophe has been removed entirely

This reveals an interesting necessity - when searching for a name, for example, you need to search for a “normalized form” of it. All of the following search queries:

O SullivanSullivanOsullivanOʼsullivan

should return that name, regardless of how it’s been entered into the system. This is decently handled by systems that normalize tokens before at ingest - like Lucene - have sophisticated plug-in approaches for doing this token pre-processing. But we can actually go a bit differently, and not have to introduce ElasticSearch or OpenSearch into our stack at all.

Hexatetrahedral Rails

Software is a creative endeavor and a craft. And like any creative endeavor and any craft, it is subject to fashions. About a decade ago, one of those fashions was Hexagonal Rails largely inspired by the DDD book, but also by the original Hexagonal Architecture work by Dr. Cockburn.

Some of these applications are now up for their Rails upgrade and an “oil change,” and it’s interesting to see them in the wild and how they get perceived through the lens of the years that have gone by since then. I call them “hexatetrahedral Rails applications” - in jest, of course - because they often end up presenting complexities that go beyond the intended benefits, sometimes becoming what I’d describe as complications or even complicationments.

And while I appreciate the good intentions behind this approach, I’ve found myself questioning whether the benefits outweigh the costs in most cases. So I felt that - at the very least - I want to suss out why it is valuable, but also - figure out how I define/detect those apps in the wild, and how to understand their raison d’être well. Not to be snarky - but to look for the nuggets of wisdom in there which can be useful for us, today.

Why can't we just... send an HTML email

A few months ago my partner-in-love-and-in-crime came with a seemingly innocuous request, which went as follows:

We have an event coming up, and I need to send out a press release via email. It’s simple enough - just a couple of images and a few blurbs of text. I can’t seem to be able to make it look good in Gmail nor in Apple Mail. How does one do such a thing?

Now, we computer-savvy household members know darn well that HTML email is, on the list of terrible IT things we have to help others with, right below the “can we get this printer to work?”. It can get… challenging. And yet, given that I have done this “HTML email” thing for a while – this piqued my curiosity.

How hard can it be, thought I, to manually code an HTML email with images - and then use some Advanced Technology™ to turn it into a proper HTML email?

While doing that using current commercial platforms turned out to be very hard indeed - for reasons having nothing to do with technology – I did come to an elegant solution that is usable locally. Exactly what I wanted:

- Layout an HTML email by source editing

- Turn it into an actual email (there is a file format for that!)

- Preview it in Apple Mail (on macOS and an iPhone) and in Gmail (desktop Web and iOS)

- …rinse and repeat until it looks great

The real “aha moment” came when I realized that the tools I needed were already sitting right there in my Rails stack, just waiting to be used in a slightly different way. Premailer, Nokogiri, and the Mail gem - these aren’t exotic dependencies or bleeding-edge libraries. They’re battle-tested, well-documented tools.

Curious where I ended up with that? Read on!

Data over time

Since 2021 I have been working at Cheddar Payments, which is a fledgling fintech startup in the UK. It was a substantial change from WeTransfer in terms of the problem domain, but also scale.

The scale at a B2C fintech is smaller, but the challenges are, in ways, much harder. And the biggest challenge - engineering-wise - is “data over time”. I’ve learned more about data over time than I would like, and it can be useful to share my experience.

GETting conditionally - the bare basics

A while ago, a prominent Vercel employee (two, actually) posted to the tune of:

Developers don’t get CDNs

Exhibit A etc.

It is often that random tweets somehow get me into a frenzy – somebody is wrong on the internet, yet again. But when I gave this a second thought, I figured that… this statement has more merit than I would have wanted it to have.

It has merit because we do not know the very basics of cache control that are necessary (and there are not that many)!

It does not have merit in the sense that force-prefetching all of your includes through Vercel’s magic RSC-combine will not, actually, solve all your problems. They are talking in solutions that they sell, and what they are not emphasizing is that the issue is with the “developer slaps ‘Cache-Control’” part. Moreover: as I will explain, a lot of juice can be squeezed out of you by CDN providers exactly because your cache control is not in order and they offer you tools that kind of “force” your performance back into a survivable state. With some improvement for your users, and to the detriment of your wallet. But first, let’s rewind and see what those CDNs actually do.

CDNs use something called “conditional GET requests”. Conditional GET requests mean: Cache-Control. And even I, in my hubris, haven’t been using it correctly. After reviewing how it worked on a few of my own sites, I have overhauled my uses – and built up a “minimum understanding” of it which has been, to say the least, useful.

So, there it is: the absolute bare minimum of Cache-Control knowledge you may need for a public, mostly-static (CMS-driven, let’s say) website. Strap in, this is going to be wild.

And be mindful of one thing: I do not work for Vercel, CloudFlare, AWS or Fastly. I just like fast websites and I think you deserve to have your website go fast as well.

UI algorithms: a tiny promise queue

I’ve needed this before - a couple of times, just like that other thing. A situation where I am doing uploads using AJAX - or performing some other long-running frontend tasks, and I don’t want to overwhelm the system with all of them running at the same time. These tasks may be, in turn, triggering other tasks… you know the drill. And yet again, the published implementations such as p-queue and promise-queue-plus and the one described in this blog post left me wondering: why do they have to be so big? And do I really have to carry an NPM dependency for something so small?

Streamlining web app development with Zeroconf

The sites which are using Shardine do not only have separate data storage - they all have their own domain names. I frequently need to validate that every site is able to work correctly with the changes I am making. At Cheddar we are also using multiple domains, which is a good security practice due to CORS and CSP. Until recently I didn’t really have a good setup for developing with multiple domains, but that has changed - and the setup I ended up with works really, really well. So, let’s dive in - it could work just as well for you too!

A can of shardines: SQLite multitenancy with Rails

There is a pattern I am very fond of - “one database per tenant” in web applications with multiple, isolated users. Recently, I needed to fix an application I had for a long time where this database-per-tenant multitenancy utterly broke down, because I was doing connection management wrong. Which begat the question: how do you even approach doing it right?

And it turns out I was not alone in this. The most popular gem for multitenancy - Apartment - which I have even used in my failed startup back in the day - has the issue too.

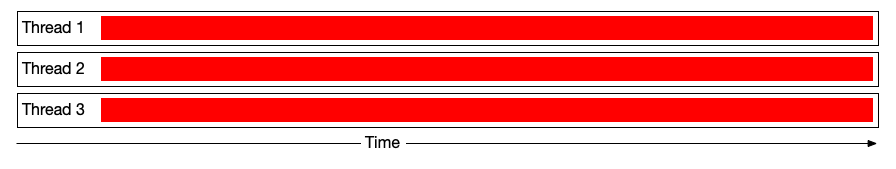

The culprit of does not handle multithreading very well

is actually deeper. Way deeper. Doing runtime-defined multiple databases with Rails has only recently become less haphazard, and there are no tools either via gems or built-in that facilitate these flows. It has also accrued a ton of complexity, and also changes with every major Rails revision.

TL;DR If you need to do database-per-tenant multitenancy with Rails or ActiveRecord right now - grab the middleware from this gist and move on.

If you are curious about the genesis of this solution, strap in - we are going on a tour of a sizeable problem, and of an API of stature - the ActiveRecord connection management. Read on and join me on the ride! Many thanks to Kir Shatrov and Stephen Margheim for their help in this.

Template-Scoped CSS in Rails

Hot on the heels of the previous article I was asked about my idea of having co-located CSS. Now is the time to share, so read on!

A supermarket bag and a truckload of FOMO

The day was nearing to a close. The sun has already set, but that Friday evening in Amsterdam was still warm. Unusually warm, in fact, for those late days in March – as if spring decided to bless my pilgrimage, for that pilgrimage was not jovial.

I was sitting at a ramen joint, sipping on the broth. To my left, a blue, crinkled supermarket shopping bag was sitting solemnly, inconspicuously.

Inside that bag sat a slightly used Mac Studio, which I have just purchased to be able to edit CSS of my own application.

By the time that evening descended upon the south of Amsterdam, I have lost 3 days of my life trying to figure out why I was unable to edit CSS.

But let me rewind a bit.

UI algorithms: a tiny undo stack

I’ve needed this before - a couple of times. Third time I figured I needed something small, nimble - yet complete. And - at the same time - wondering about how to do it in a very simple manner. I think it worked out great, so let’s dig in.

Most UIs will have some form of undo functionality. Now, there are generally two forms of it: undo stacks and version histories. A “version history” is what Photoshop history gives you - the ability to “paint through” to a previous state of the system. You can add five paint strokes, and then reveal a stroke you have made 4 steps back.

Musings on module registration (and why it could be better in Rails)

Having the same architecture problems over and over does give you perspective. We all love making fun of the enterprise FizzBuzz but there are cases where those Factories, Adapters and Facades are genuinely very useful, and so is dependency injection. Since I had to do dependency injection combined with adapters a wee many times now, it seems like a good idea to share my experience.

What I will describe here mostly applies to Ruby, but it mostly applies to the other languages and runtimes too.

The surcharge of big tech

There is a lot of talk that big-tech companies are willing to pay way more, way up north of the market to the local rates. They all seem similar:

- Pre-IPO or public

- Looking for senior software engineers or staff engineers

- Salary brackets never published, and even recruiters stay fairly tight lipped

- So-described “transparent” interview process - usually a marathon of systems design, “culture fit” and leetcode-like excercises

And yet it seems that it is hard for those firms to acquire talent, even though in some cases they are prepared to offer compensation 40% to 50% higher than a standard local development agency would. Why is that?

Well, they know what they are recruiting for. It is a challenging environment, and – despite it sometimes lookign otherwise – they want the people they hire to still be able to perform.

Disownership, pull requests and de-facto architects

A while ago I got really triggered by by the following two tweets - one by Avdi and another by Pete

This essay was on my mind - and lying dormant - for a couple of years, but I think it didnt lose its relevance.

Supercharge SQLite with Ruby functions

An interesting twist in my recent usage of SQLite was the fact that I noticed my research scripts and the database intertwine more. SQLite is unique in that it really lives in-process, unlike standalone database servers. There is a feature to that which does not get used very frequently, but can be indispensable in some situations.

By the way, the talk about the system that made me me to explore SQLite in anger can now be seen here.

Normally it is your Ruby (or Python, or Go, or whatever) program which calls SQLite to make it “do stuff”. Most calls will be mapped to a native call like sqlite3_exec() which will do “SQLite things” and return you a result, converted into data structures accessible to your runtime. But there is another possible direction here - SQLite can actually call your code instead.

Maximum speed SQLite inserts

In my work I tend to reach for SQLite more and more. The type of work I find it useful for most these days is quickly amalgamating, dissecting, collecting and analyzing large data sets. As I have outlined in my Euruko talk on scheduling, a key element of the project was writing a simulator. That simulator outputs metrics - lots and lots of metrics, which resemble what our APM solution collects. Looking at those metrics makes it possible to plot, dissect and examine the performance of various job flows.

You can, of course, store those metrics in plain Ruby objects and then work with them in memory - there is nothing wrong with that. However, I find using SQL vastly superior. And since the simulator only ever runs on one machine, and every session is unique - SQLite is the perfect tool for collecting metrics. Even if it is not a specialized datastore.

One challenge presented itself, though: those metrics get output in very large amounts. Every tick of the simulator can generate thousands of values. Persisting them to SQLite is fast, but with very large amounts that “fast” becomes “not that fast”. I had to go through a number of steps to make these inserts more palatable, which led to a very, very pleasant speed improvement indeed. That seems worth sharing - so strap in and let’s play.

Joke accounts are a bitter necessity

Joke Accounts and the BOFH are Garbage by Aurynn Shaw struck a chord with me back in the day. After all, who wants to exclude people? Who wants to make them feel unwelcome? Having survived some amount of difficult working experiences I have changed my mind on this drastically. The joke accounts are a bitter necessity, and I’ll try to explain why.

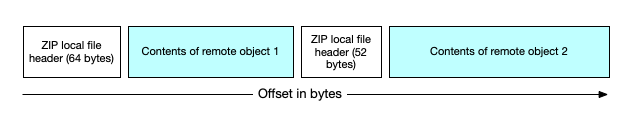

Reviving zip_tricks as zip_kit

Well-made software has a lifetime, and the lifetime is finite. However, sometimes software becomes neglected way before its lifetime comes to an end. Not obsoleted, not replaced - just.. neglected. Recently I have decided to resurrect one such piece of software.

See, zip_tricks holds a special place in my heart. It was quite difficult to make, tricky, but exceptionally rewarding. It also went through a number of iterations, and working on it taught me a great lot. How short methods are not always a good thing. How it is important to provide defaults. How over-reliance on teensy-tinesy-objects can make software hard to read and understand (in case of Rubyzip). And how open source might work in a corporate setting.

What follows is the story of how zip_tricks became zip_kit and what I have learned along the way.

Exploring batch caching of trees

From my other posts it might seem that I am a bit of a React hater - not at all. React and related frameworks have introduced a very powerful concept into the web development field - the concept of materialised trees. In fact, we have been dealing with those in Rails for years now as well. Standard Rails rendering has been a cornerstone of dozens of applications to date, and continues to be so. But once you see “trees everywhere” it is hard not to think about optimising using trees. So let me share an idea I’ve had recently which might as well be very neat.

Testing a thousand applications with Flipper

Feature flags are amazing. No, really, did I tell you that feature flags are amazing? They are. But you might be running a thousand applications. When this kind of complexity gets involved you might need to test combinations of feature flags, sometimes - dozens of those combinations. Exhaustive testing to the rescue!

Tool complexity might have a cure: those pesky people who say no

Marco Rogers started a remarkable thread on Mastodon, which absolutely struck it home for me. Teams absolutely do get mired up in complex tooling. They absolutely can be unprepared, and there absolutely is a skew between the newly-minted “frontend” and “backend” ecosystems. I might have a few things to say about this.

Your might be running a thousand applications

Feature flags are awesome. But just like user preferences or settings they have the tendency of turning your application into multiple applications, all embedded in one.

Changing your mind is not my job

There is a popular meme that has been going around for years now, This is in fact close to heart for every passionate technologist. Most of use have either been the guy at the desk, or an innocent passer-by willing to enter the conversation. With fairly expected results.

Versioned business logic with ActiveRecord

Every succesful application evolves. Business logic is often one of the things that evolves the most, but it is customary to have data which changes over time. Sometimes - over months or years. A lot of spots have logic related to “data over time”. For example: you collect payments from users, but some users were not getting charged VAT. Your new users need to get charged VAT, but they will also pay more, but you want to “grandfather” your existing users into a pricing plan where VAT is included in their pricing, so that the amount they pay does not change.

ActiveRecord, by default, is not very conductive to such changes, but I have recently discovered a very nice pattern for adding versioned logic to models.

On the value of interfaces (and when you need one)

It is curious how people tend to bash DDD. I must admit - I never worked in a full-on DDD codebase or on a team that practices it, but looking at the mentioned articles like this one does make me shudder a little. There is little worse than a premature abstraction, and a there is a noticeable jump (or rather: a trough) which goes from abstraction to indirection. I’ve been programming for more than 20 years now - 12 of those professionally (with a little stint in-between) and I also went from obsessing over abstractions to a more, let’s say, “common sense” approach to them. Oddly enough, this is not about OOP for me - it is about modules. And, to an extent, types - but I do believe types and behavior are going to stay connected in meaningful way. Whether you do point.move() or move(point) is not of importance as long as it generalizes over some kind of Movable.

It did take a while for a more digestible take on this to begin to crystallize, so I fugured it could be put on paper.

UI algorithms: drag-reordering

A list where you can reorder items is one of entrenched widgets in UIs. Everyone knows how they are supposed to work, they are cheap to build, intuitive and handy. The problem is that they often get built wrong (not the “just grab Sortable.js and be done with it”-kind-of-wrong, but the “Sortable.js does not provide good user experience”-kind-of-wrong). I’ve built a couple of these for various projects and I believe there is an approach that works fairly nicely. So let’s build us a reorderable list with drag&drop. As usual, we will be doing this laaaive without React and without any libraries.

The unreasonable effectiveness of leaky buckets (and how to make one)

One of the joys of approaching the same problem multiple times is narrowing down on a number of solutions to specific problems which damn work. One of those are idempotency keys - the other are, undoubtedly, rate limiters based on the leaky bucket algorithm. That one algorithm truly blew my mind the first time Wander implemented it back at WeTransfer.

Normally when people start out with rate limiting, a naive implementation would look like this:

WebRequest.create!(at_time: Time.now)

if WebRequest.where(key: request_ip).where("at_time BETWEEN ? AND ?", 1.minute.ago, Time.now).count > rate_limit

raise "Throttled"

else

WebRequest.create!(key: request_ip, at_time: Time.now)

end

This has a number of downsides. For one, it creates an incredible number of database rows - and if you are under a DOS attack it can easily tank your database’ performance this way. Second - there is a time gap between the COUNT query and the INSERT of the current request. If your attacker is aggressive enough, they can overflow your capacity between these two calls. And finally - after your throttling window lapses - you need to delete all those rows, since you sure don’t want to keep storing them forever!

The next step in this type of implementation is usually “rollups”, with their size depending on the throttling window. The idea of the rollups is to store a counter for all requests that occurred within a specific time interval, and then to calculate over the intervals included in the window. For example, with requests during 5 seconds, we could have a counter with a secondly granularity:

if WebRequest.where(key: request_ip).where("second_window BETWEEN ? AND ?", 5.seconds.ago.to_i, Time.now.to_i).sum(:reqs) > rate_limit

raise "Throttled"

else

WebRequest.find_or_create_by(key: request_ip, second_window: Time.now.to_i).increment(:reqs)

end

This also has its downsides. For one, you need to have good granularity counters, meaning that the interval which you count (be it 1 second, 1 day or 1 hour) need to be cohesive with your throttling limit. You can of course choose to use intervals sized to your throttling window and sum up “current and previous”, but even in that case you will need 2 of those:

|••••••• 5s ••T-----|----- 5s -----T•••|

There is also an issue with precision. If you look at the above figure, “window counters” would indicate that your rate limit has been consumed even though looking back 5 seconds from now you are not covering the entire 5 second window looking back, but just a portion of it. So if you sum the counter for the current interval and the last, the sum might be higher than the actual resource use for the last 5 seconds.

As a matter of fact, this is what early version of prorate did.

Two other possible reasons juniors are having it tough on the job market

It has recently become a hot topic that junior developers are having difficulties finding a job, even though the market is very hot at the moment. Market for senior talent is, yes, but for juniors - not so much. As a matter of fact it has been flagged that it is harder than ever for a beginner to start a career in software. Multiple causes have been outlined:

- Companies do not want to invest into talent which is going to demand extreme raises or leave as soon as they make up experience

- Companies assume they are not able to train and teach in a full-remote setting

- Companies assume they need a full-blown training program

I would like to raise two extra topics though, which I haven’t seen mentioned. I might be a minority voice here, but I feel they are relevant and we are not giving them due attention.

We really need to change this.

Actually creating a gem for idempotency keys

I’ve already touched on it a bit in the article about doing the scariest thing first – one of the things we managed to do at WeTransfer before I left was implementing proper idempotency keys for our storage management system (called Storm). The resulting gem is called idempo and you use it about like this:

config.middleware.insert_after Rack::Head, Idempo, backend: Idempo::RedisBackend.new(Redis.new)

It is great and you should try it out. If you are pressed for time, TL;DR: we built a gem for idempotency keys in Rack applications. It was way harder than we expected, and we could not find an existing one. As a community we do not publish enough details about how software gets designed, which makes it less likely that our software will be found and used. I don’t want this to happen to idempo. Making a gem which does a seemingly tiny thing can be devilishly complex, and switchable implementations for things are actually useful.

Disclaimer: consider all code here to be pseudocode. For actual working versions of the same check out the code in idempo itself.

Why did we even need it?

To recap: idempotency keys allow you to reject double requests to modify the same resource (or to apply the same modification to the same resource), and they map pretty nicely both to REST HTTP endpoints and to RPC endpoints. Normally idempotency keys are implemented using a header. For a good exposition on idempotency keys, check out the two articles by Brandur Leach here - the first one gives a nice introduction, and the second one gives a much more actionable set of guidelines for implementing one.

The point where we realised that we will need idempotency keys in the first place came about when we decided to let other teams use a JavaScript module that we would provide. The module - and the upload protocol WeTransfer uses - is peculiar in that it has quite a bit of implicit state. Multiple requests are necessary, and they need to be synchronised somewhat carefully. Requests should be retried, because we were already using a lot of autoscaling - so a server could end up dying during a request. Yet some of the operations we let our JS client perform (such as creating a new transfer) must be atomic - you can only create a transfer once, and there is some bookkeeping involved when doing that. The transfer is going to have a pre-assigned ID, and if the client attempts to create a transfer and then does not register properly that the transfer got created the ID will end up taken. This bookkeeping touches the database, and thus creates database load. Also, the output of those operations can be cached for some time. In the past, we had situations where an uploader would end up in an endless loop (due to problems with retry logic for example) and would hit the same endpoint, near-endlessly, and very frequently. If we had an idempotency key system we could significantly reduce the impact this had on our systems – and avoid a number of production incidents. So with the new JS client we wanted to make it support an idempotency key for the entire upload process for your transfer, and we wanted to have this idempotency key be transparently used on the server.

As a matter of fact, also our iOS app ended up implementing idempotency keys in the same way - and with the same benefits.

Surprisingly to us, while Ilja Eftimov has made a good write up about idempotency keys and made a demo of an implementation in this article we were surprised to find no proper gems for idempotency keys existed, which we could pick off the shelf. So some brainstorming and a little pondering later we decided that we had to make one, albeit only for our storage manager system. It is not that Ilja’s code is bad – it just omits a few interesting side-effects which might be more frequent than we could think of initially.

This article is long, and there are a few things I want to touch on here.

Before we move any further: idempo came about with great help from Lorenzo Grandi and Pablo Crivella, sending my hugs to both. Lorenzo is also in the fabulous new Honeypot documentary that you can find here.

The value of not having to be right

In software we pride ourselves in being “data-informed”, “metrics driven”, and “formally proven” is the highest praise. Few things feel as satisfying as being actually right, with no shadow of a doubt and no way of escape for our opponents. Being tech people, we cling to this idea that “the more correct” idea, or the one which is “objectively right”, should win.

Now, do not underestimate this:

As long as all we have is opinions, mine is the best.

which is “one way to do it” – specifically, “a way” to do it if the team is comprised of jerks. But believe it or not - most teams are composed of decent humans who genuinely want to do good by each other.

Art, science, taste and "clean code"

Science establishes concepts that describe nature, and is often able to signal binary answers to questions. “Can acceleration be non-0 when velocity is 0?” “What is the circumference of a circle?” “How many chromosomes does a fruit fly genome contain?”

Art, unlike science, speaks to our emotion. Great art is great exactly because - in addition to execution - it stimulates us to imagine something which makes us feel in a certain way. It is about communicating emotion.

There is a ton of talk about how “bikeshedding details” is “sophistry”, “you should not care that much”, “style reviews create opportunities for abuse” and the like. But we are, as a community, slowly moving towards optimising for two things, and two things alone:

- Making all changes we do measurable improvements. Either using objective or fake metrics which will somehow demonstrate that “we were right” or “we were wrong”

- Making nobody feel bad, ever

When we optimize in that direction, we tend to dismiss (or even discourage) “taste”, because of course it is personal, it is subjective, and it can be imposed by someone in position of authority. What we do skimp on in the process, is that “bikeshedding” design decisions - and code! - bringing back taste thus - can produce a solution which is not only “nicer”, or “pleases the loudest senior person on the team the most”. There are things we can debate in that domain, and they are all of differing orders:

- Not code formatting (just install an automatic formatter for this and move on)

- Size of modules / functions

- Granularity of modules / functions

- Verbosity / DRYness of tests

- Quality of encapsulation

While the things above are not quanitifiable, the paradox is that their outcomes can be, or at the very list they can be qualifiable. They are important and if you give them some TLC you are going to get reductions in your cost of ownership down the line.

The good questions for bikeshedding

Here are those, and I was incredibly lucky to see more than a few times when prioritizing them in bikeshedding discussions led to meaningful, useful outcomes. I like to formulate them as questions - because barking orders at each other is exactly what creates the toxic environments we overcorrected from. Let’s walk through those questions:

- How long will it take a person who never worked on your module before to read your test when there is a problem? What will be the hurdles they are going to likely encounter? What will be the cost of unpacking the abstractions you have used?

- What could we change so that the addition of your module, in total, allows us to have less software?

- Is there something in your change that is going to be difficult to understand for a person 1 level below you in seniority? 2 levels? 3 levels?

- If this codebase already contains 3 places where a similar module/change has been added, does your 4th change warrant doing in a different style? Are you committing for the other 3 too or are you just being a passenger for this one feature?

- What will be the cost of removing this module you are adding? Can we reduce the necessary churn it to removing 2 files (module + module test) from the code repository? and have nothing break?

- How many jumps from module to module (or function to function) will someone have to do to understand a specific flow in its entirety?

- Does the API surface of this module map well onto the underlying system one level down that it is driving?

Case study: if you ever wondered why so many have problems with Redux, try to size the codebases using Redux that you have seen against this list of questions:

- How hard will it be to remove this reducer+actions+dispatch functions if we want to get rid of them?

- How much indirection has to be followed to read this UX flow start-to-finish?

- Is the use of Redux state coherent with the use of local state?

Questions map to costs

In effect, when we bikeshed over these questions, we optimize for two very specific costs of software to us:

- Cost of reading and understanding

- Cost of removal/rework

And these costs are also to the business, because they will be very apparent when features have to change, or when the teams need to scale. Let’s deal with those in order.

Cost of reading and understanding

The first one is essential, and also something that is not well covered either in vocational study (bootcamps) or in CS curricula - we spend way, way more time reading and understanding existing code than we do creating new code. We absolutely do not pay enough attention to making our code easier to understand. And making code easier to read and understand is directly coupled to those pesky “taste” and “style” issues we so so forbid each other from discussing. Just a small sampling of those:

- Longer identifiers (

max_widthinstead ofmw) - Identifiers hinting behavior or type (

maybe_userfor a nullable,body_strfor a string as opposed to “body abstraction from one of the libraries we use”) - Use of keyword arguments/named arguments over positional arguments (

insert(at: pos, item: it)overinsert(it, pos) - Use of standard language constructs over framework constructs (

prependoverActiveSupport::Concern) - Comments explaining any non-obvious behavior (

# S3 multipart part numbers are 1-based) - Metaprogramming / macro output examples next to macro code

And these questions - if you look close enough - are not of the variety “I like it more” - they are of the variety “we are not doing our job well because it will be harder for a new person to understand this system”.

If we follow the now-mainstream “make everyone feel nice” ideology, we are invariably getting to a situation where asking for these affordances becomes a social misstep.

Moreover: modern teams with high-paced delivery operate via very, very opaque socio-political streams. With how hard it is to “perform” in a modern enterprise getting the “code” right is actually the easy part! There is a whole battery of adverse effects of the modern workplace which are going to make it impossible for the same person to “own” the same module for any meaningful amount of time. But exactly because of these difficulties we should pay more attention. Even if the model of operation is “commit the module, have people get their promotion, have a reorg, be moved to the next feature” - someone is going to inherit this code and highly likely will have to deal with it in some way. Someone will carry your can. The faster our org chart iteration, the more important it is to make your material discoverable, readable, clear.

Cost of removal/rework

This is something we do not think about much at all, because “removing a piece of software never got anyone promoted” - just like “nobody got fired for choosing Java”. But it does provide tangible benefits, and does make iteration easier!

For example, in the last project I have worked on, we implemented idempotency keys. Despite two great articles on the topic existing - one from Brandur and another from Ilja - there was no good module for idempotency keys we could use off-the-shelf, so we had to roll our own. We had to go through 2 throwaway implementations before we found one that became idempo

This would have been considerably harder to do if our idempotency keys were managed from the various applications we have inside of our Rack wrapper application, and became very easy with just one line of middleware. To swapover from one implementation to another, we had to change 2 lines in our codebase. To remove an iteration which didn’t work, we had to delete 2 files and 2 directories (since we used modules, everything could be removed in one go).

Same for things where - if you squint well enough - you say “if we were aiming for the microservice architecture this module would be a service”. Why not make it a single module with one function? If the fashion for microservices stays, and the product you are working on becomes more successful, replacing a local function call with an RPC call will be easy. Going in the opposite direction will be much harder because the cost of removal of a microservice is higher (remember the bit about “delete 2 files”).

See also - Write code that is easy to delete, not easy to extend.

Good kind of bikeshedding is bikeshedding which optimizes for better communication and easy removal. Let me leave you with this quote by @zverok which should be printed on banners and hung on walls across all the offices where software gets worked on:

Truly the whole thread is magnificient - find it here

Thus: the bike shed should be green, because most bikesheds in our neighbourhood are green and because we regularly hire people who have never in their life seen a bike shed. And it must use keyword arguments. No argument about it.

For another great and considerate take on the topic - see Why We Argue: Style by Sandi Metz.

Do the scariest thing first

I know the pieces fit, cause I watched them fall apart

Kir recently wrote about fragmented prototyping which struck a nerve. I use a very similar approach for gnarly engineering and system design problems, so figured I could share while we are at it. I call it the maximum pain upfront approach. Another name could be do the thing that scares you the most first.

It goes roughly like this. When you need to design a system, make an inventory of the tasks/challenges you expect and make a list of them. Preferably list out all of them, in detail. Then look at that list, and find the thing you know the least about - or a thing that scares you the most. Then try to “run around” your system and design the least possible amount of “glue” around the piece you are worried about. It doesn’t have to be perfect, “just enough” is enough. Make your system do something sensible, provide just that little bit of output which proves your system is sane and can roughly do what it is supposed to do. This will be your “skeleton”.

Then comes the “maximum fear” part. Laser-focus on the part of the system which scares you the most. Something you never done before. Something you do not know the constraints of. Something that requires you going 2-3 levels “down” from what you normally consider comfortable. I’ll give a few examples from my experience where I hit those “maximum fear” aspects:

- In zip_tricks the part I was completely lost about was parallel compression. In retrospect it wasn’t that necessary because we ended up not using it (the cost of speculatively compressing all of the uploaded data proved too great when more than 80% uses lossy compression formats, and will thus not compress well)

- In SyLens it was matrix transforms

- In the download server, Ruby memory consumption was the item that scared me the most - especially because initial experiments were not encouraging at all

- In idempo the atomicity guarantees and data races were legitimately scaring me quite a bit

Here is how to recognise one:

- You never did anything like this before.

- You vaguely know that the thing will have behavior you do not completely understand - like race conditions, or numeric precision issues

- You are unable to visualise the code / design which will carry the feature ahead of time

- You know that the problem touches a theory topic you are unfamiliar with, and it will require you to upskill

- You try to search for readymade solutions, and they are vaguely close – but don’t look like “closed form” solutions, or you have doubts you wil be able to use them in your case

In all of these situations, I went about it roughly the same:

- Set up the “skeleton”, or “harness”, which will run the code that scares you the most - it can either be a minimum possible implementation of the “system around” that you are building, or just a test runner. Make sure it works first, make it accept input and provide some output! These “scary problems” often cause you to backtrack - or, in extreme cases, will need the work abandoned! Be prepared for this and do not get caught with a blank sheet of paper. Having a “skeleton” will allow you some holdfast to come back to if you get lost in the woods

- Try any approaches that could work. Test-driven might work well if you can isolate the part of the system well. Metrics work great - profile and measure and try to compare various solutions to give you perspective. Use visualisations, sketches, Matlab plots, Jupyter notebooks, Excel - anything that gets you closer to a solution is fair game! Doesn’t have to be in the target language even (sometimes).

- Time-box your effort. This is important! Scary problems are nerdsnipe nirvana. You can become so consumed that you will lose track of all the other work that needs to be done. If you do not have a time limit a scary problem can consume you for months and you won’t even notice.

In my previous work I had a few jobs where I would laser-focus on a particular part of the job, partially because it was the chunk I was unsure about the most. I would then spend most of my allotted time – without the “skeleton” setup in place – on “nailing” the part I was fearful of. As the time ran out, I would en up in a situation where only about 20% of the “scary thing” was done, but there was nothing besides it. No skeleton, no reversion strategy and no “big strokes” version to backtrack to. This was very embarrassing and painful for the clients/stakeholders too!

So, imagine you end up with a problem similar to what Kir encountered: “Make programming language X do Y bytes per second over interface Z.” You have never done this before, you know that this requires detail work and it might yield a negative outcome (“it is not possible to make this thing do that”). Time-box for it! Allow yourself a day or two just for that problem, and have a wrapper in place. If you fail, you can backtrack to the wrapper and begin again, or replace your yet-missing implementation with a shim of some kind. It will also give you space to try again later. On some of these problems, I had to make 2, 3 or even 4 attempts before the final solution emerged.

A few caveats are in order of course.

- When you see a problem like this - think about bypassing it outright. For example: you know that DynamoDB has write and read quotas, and you are afraid of hitting them. Think about whether your project needs DynamoDB to begin with. Could you do without it? Maybe using a datastore with different guarantees and tradeoffs can get you to the end solution faster, and will allow you to skip the problem?

- What will you do if you are unable to solve the problem? Have a plan B. If your system is moot without the scary component: congratulations, you have potentially blocked yourself.

- Have a buddy. You will, after some time in the field, have a list of names you could scroll through – of people who “know a lot about X”. Ask the person who knows the most about the part that scares you so much, maybe not immediately but it helps to have them in the back of your mind. This is where bona-fide networking becomes essential.

- This is not necessary everywhere. Sometimes there is no “scary component”, there is just… grind. Time pressure, temperamental client/stakeholder, shitty deployments, that sort of thing. Know to recognise and manage accordingly. Especially when we get bored out of our mind, we tend to create complicated contraptions, fight through their complexities and then admire the end result, while they should not have been applied in the project to begin with.

- The technique is usable when you can recognise the scary problem. It is going to be much, much harder for a junior to recognise those – and they are different for each and every person (there is an intersection of your skillset and problems you can attack). A crucial task for a mentor is to find those scary parts ahead of time, and either steer the mentee around them or try to attack them ahead of time to give the mentee some cover.

- And obviously: the bigger the team, the easier it would be to find people familiar with the topic of the scary problem. They might be able to crack it for you quickly – so divide work if you can. If your team can have good, hot, frank conversations about areas of expertise: you are in luck.

- It could be that the true challenge lies where you did not expect it instead. By investing time in the scariest thing you wil rob yourself of time that you could have used for discovering the “unknowns” you didn’t even think existed, and they would turn out even scarier. So again: the “scariest thing upfront” technique I would recommend to experienced users.

This approach has saved my bacon quite a few times. Use with moderation, and may you always succeed.

You must be this tall to do the five whys

There recently was an essay by Gergely about incident response, and a related example of “The 5 Whys” questions, which has been mentioned as a good way to do incident retrospectives.

I am not a fan of the “5 whys” approach, because I came to believe there is a problem with it in that it overfocuses on one particular path. With just 5 of the “whys” there is only so much you can express that only touches “abstract group output” or “technology”, without getting into the all-touchy-feely, people side of the issue. “The 5 whys” is by far not deep (and not wide) enough to even start approaching these.

Let’s set the scene: there was an outage due to a bug, and teams are doing a retrospective which focuses on how mitigation was handled (not the bug that caused the incident). We “chop scope” here to maintain focus. Imagine we start with the following “5 whys”:

- Why was the site down for customers? Because there was a small bug. It was easy to fix but deploying the fix-forward took too long.

- Why did it take so long to release the fix? Because half of the time was spent waiting for CI to pass, and the other half for the fleet to be rotated

- Why did it take so long for CI to pass? Because our UI tests are slow and flaky

- Why did it take so long to rotate the fleet? Because we do not have sufficient instances to double capacity during release, and the deployed artifact is a very large image

- Why do we need immutable infrastructure and large images? Because the industry does not provide a readymade path for “slim overlay” artifacts and because immutable infrastructure has other benefits we found more important than rapid releases

Now imagine a slightly different list of “whys”, asked with a bit more tenacity:

- Why was the site down for customers? Because there was a small bug. It was easy to fix but deploying the fix-forward took too long.

- Why did it take so long to release the fix-forward instead of rolling back? Because to roll back you need to have adequate stored artifacts and a UI that operators can use in time of duress

- Why don’t we have such a UI? Because the deployment control is run by a different team than the operators managing incidents

- Why can’t operators create their own tools for deployment control? Because they are going to use tools not familiar to the team managing infrastructure, and that team wants to own and control all of those tools

- Why does the team managing infrastructure decide on choice of tools for building deployment control? Because they have their own deployment control scheduled for Q4 of next year.

See - there is already a plot emerging. We have two teams, the incentives of those teams are misaligned, therefore there are no rollbacks and every fix must be a fix-forward, which must trigger the long UI testing path. Oh, and apparently operators of the service do not like the deployment tooling. But we are doing an exercise here, let’s push it a notch and get edgier still. Remember, this page is a “lab” - nobody is going to get hurt:

- Why was the site down for customers? Because there was a small bug. It was easy to fix but deploying the fix-forward took too long.

- Why did it take so long to release the fix-forward instead of rolling back? Because there was no interface available to perform rollbacks 2b. Why was there no interface to perform rollbacks? Because 6 people tried to set up a deployment control UI which would allow for rollbacks, but all of them were denied the opportunity “to not stir waters”

- Why, if the need to have deployment control was so apparent, did we discourage 6 people from solving that problem? Because we didn’t want to make the team maintaining infrastructure upset

- Why were we so scared of making them upset? Because a person on that team is known to be volatile, and could have deleted the production database and could create great risks for the business

- Why do we have a team which poses such a business risk? Because upsetting them could trigger actions that would severely damage production systems, and we lack proper safeguards to prevent this.

See, this gets hot right there. More importantly, there were multiple questions regarding the applicability of “The 5 Whys” in a very interesting light: “who is interested in uncovering the truth” - and, more importantly, the “truths” to uncover differ depending on who is digging.

People usually prefer to feel good, and usually do not like being blamed or pinpointed for things. And the desire for incident retrospectives is a good one - it is to figure out the reason for the most painful things in the incident, and figuring out how, in the future, something like this can be prevented. The challenge lies in the fact that there are multiple truths. All of the three variants of the conversation above present things which are true, but groups will be motivated to steer towards the “first” variant, where there is no blame, no statement of potential unintended consequences, weakness or fear, and questions which are on many people’s minds get avoided. This first variant is not intrinsically “better” than the rest, and in terms of answering the question about recovery and improving the situation it might provide hints how the organisation could proceed.

But from the perspective of “uncovering the truth” - which, I would concur, is a very noble objective which should not be discarded - you will be getting much more bang for your buck with the “third variant”, where you steer directly into the touchy-feely problems. The issues like the one here very often are issues of dysfunctions within (or between) teams, and they will often be the hardest to address. Fixing them will have an incredible rejuvenating effect as well.

The challenge is twofold: first, 5 is too little. When you are in a situation of extreme stress you do need to be able to get by with “just 5” I imagine, but in a more relaxed case (and we are in this situation mostly - we are just running websites, come on!) - all of the three variants above could be explored and discussed. All of the three variants provide facets of truth, and if a collective is truly mature they will be able to explore more of these facets without becoming defensive or hostile. There are tools for this! Non-violent communication is just one that comes to mind.

It is not a linked list, it is a tree - and doing a good traversal of that tree can, indeed, “uncover” truth that you do want to know - or, often, that is long overdue being put on paper or spoken out in public. This is where emotional safety, trust and vulnerability are paramount. Of course you could say this is a conversation path only those in positions of relative safety and privilege can permit themselves to have. And yet: ponder the idea that you could get to a mode of communication where you could unwind things to the level of “stop short of societal problems at large” instead of “stop short of mentioning anything related to how people behave badly with one another”.

Compare the potential outcomes of this particular session:

- If we use the first variant, likely we will decide to “add button to bypass UI tests”. This is a plausible approach, only it introduces its own decision trees later:

- How do we know our UI doesn’t have regressions?

- Who decides which of the builds with skipped UI tests is “good enough”?

- There is still no rollback…

- A likely consequence of the tradeoff will be “We have deployed a version with broken UI to mitigate an unrelated bug”

- If we use the last variant:

- We uncover a great risk to the business and we can look for reconciliation or rupture to mitigate that risk

- We can start work on deployment tooling

- We ruin silos teams have worked themselves into

- We likely don’t have to skimp on UI testing because we will be able to roll back to a “known good” version

Only you must be this tall. The very hot, intense “5 Whys” bursts could work - between equal co-founder peers, and in situations of extremely high levels of trust. That is not a luxury afforded to everybody.

Or you should skip the 5 whys alltogether and go for contributing factor analysis instead. In fact, this is the methodology I would recommend most teams of medium size and up.

P.S. As this article was being finished, news finally came in that the industry we must learn from - aviation - is learning its lesson, as Boeing’s chief technical pilot is indicted for fraud. I do not hold my hopes for higher Boeing brass getting actual accountability, but one can dream.

Why we can't have proper mentorship

This article really made me jump out of my seat. The topic of mentoring isn’t covered properly in our industry, and after having some experience in mentoring and being a mentee – both in software and elsewhere – I believe it has to do with the fact that our approach is deeply flawed to begin with.

Over the years I had about 6 mentors (or coaches, if you will), and have myself mentored about 10 people. Only once did it end in something completely unintended or dramatic, and when I was the mentee only once did it not do something good. Two of my mentors have left me worse off than where they started - one was a legitimate asshole (these people do exist, I was lucky to only have one as mentor) yet the apprenticeship proved very useful for me later. And on the level of craft there was a lot of stuff which was superbly useful. The other mentor was just unfit to lead people in this manner, and this happens too. It seems this is something our industry is overprotective for, but in my case what would have worked much better were to simply not give this person the task of mentorship – they were (and still are) excellent at their other tasks. In creativity, in execution, in precision and perseverance - just not in training.

Let’s look at how mentoring used to work in crafts, and still works in other industries (other than software) - at least to a large extend. When you would join a workshop you would become an apprentice, and there would be a more senior craftsperson assigned to mentor you. Often it would be the shop owner, sometimes it would be someone akin to today’s middle manager. In a design firm it could be one of the art directors, or a senior designer you would be “assigned” to. Most often they would be the person picking projects you would work on and would be able to pick projects which would be good for you to build up skill. Could those be the bad, boring, slog projects - could it be abuse? Of course it could, because there are risks in all things. But the mentor – a good mentor – would have to The mentor must continuously work on instilling good taste and proficiency in the mentee. This is not possible to achieve in a matrix organisation.

Multiple desires (or should I say - fashions) of our industry are extremely at odds with providing good mentorship.

- We assume engineering managers may not code since they are oh-so-busy managing people. But a mentor must assume some management duties with the mentee to succeed. Barber paradox.

- We assume the mentor must have zero imperative control over the work of the mentee, because what if the mentor turns out to be a terrible privileged abusive brute? We assume people enter mentoring for some nefarious reasons, and protecting the mentee from the mentor is paramount. But an essential part of mentoring - instilling the proper taste and work approaches – is impossible if, should things come to a head, the mentor is not permitted to steer execution.

- We assume the mentor must be from a different team “to foster cross-team collaboration”. But this will routinely create situations where a mentor sees that the mentee is working on a useless, potentially even harmful project. Tthat project has been imposed by the mentee’s actual manager, in a wrong setting, with wrong outcomes (like a throw-away web application, which will demonstrate to the mentee that their work is worthless - only the actual manager does not realise it). Yet the mentee can only do that project – while the proper answer for the growth of the mentee at that stage is to be able to say “No, I am not doing this project”.

For the sake of being constructive, let’s list the points: things I have observed which make mentorships succeed. For both participants:

- The mentor has some control of the scope/goals/implementation of the project the mentee works on. The mentor must be somewhat of a manager of the mentee, because otherwise they are not a mentor - they are a “buddy”. This implies that they should be on the same team, or within the same vertical in the organization. “Matrix mentorship” is flawed.

- The mentor is able to shoulder the mentee if they stumble during execution and take the project over. This is not a normal procedure, but it should be available to the mentee – they must be sure someone (in this case the mentor) has their back

- The mentor must be permitted to make last-minute finishing alterations and not be punished for it. Again - this is an escape hatch, to be used at last resort. But it must be available.

- The mentee and mentor must have a clear understanding for the cases where the mentor imposes their taste choices on the mentee. It is often possible that the mentee will not yet be able to understand why these choices get made this way, and it is a sick approach to label this as “dictate” or “abuse”. This is not how crafts mentorship works.

- The mentee has a “tie-breaker” available to them so that they can indicate if the relationship is not working, flag potential issues, or call for help.

The software engineering world should reconsider its attempts to build its own, “hands off” approach to mentoring and rediscover the way mentorship really works. Then we might succeed.

There is no "Heroku, but internal"

A few times a year it seems there are lamentations that “a lot of companies want something like Heroku, but on their internal infrastructure”. Kubernetes does provide something vaguely similar, but apparently isn’t there as far as features go. And time and time again there is this assumption that “if only we had internal Heroku” the amount of tantalizing choices that development teams have to make would be less, deployment would be easier, and everybody would be happier for it.

The fundamental misconception about it is the angle of motivation and control. I don’t believe that “if someone needed Heroku but internal it would have already existed”. As far as I am concerned, something similar was attempted (and I regret I never got to try it out) - it was Skyliner and I have no doubt that a system like that could be developed and marketed. The problem is the one of market. Let me explain.

Some thoughts on streaming responses

Simon Willison has recently touched on the topic of streaming responses - for him it is in Python, and he was collecting experiences people have had for serving them.

I’ve done a lion’s share of streaming from Ruby so far, and I believe at the moment WeTransfer is running one of the largest Ruby streaming applications - our download servers. Since a lot of questions rose up I think I will cover them in order, and maybe I could share my perspective on how we deal with certain issues which arise in this configuration.

Piggybacking on the interface of the server

My former colleague Wander has covered this in more detail for Ruby, but basically - your webserver integration API of choice - be it FastCGI, WSGI, Rack or Go’s responseWriter - will present you with a certain API for writing output into the socket of the connected user. These APIs can be “pull” or “push”. A “push” API allows you to write into the socket, and a “pull” API takes data from you and does something with it. The Rack API (which is the basic stepping stone for most Ruby streaming services) is of the “pull” variety - you give Rack an object which responds to each, and that method has to yield the strings to be written into the socket, succesively.

body = Enumerator.new do |yielder|

yielder.yield "First chunk"

sleep 5

yielder.yield "Second chunk"

end

[200, {}, body]

If you want more performance, you are likely to switch to something called a Rack hijack API which allows you to use the “push” model:

env['rack.hijack'] = ->(io) {

io.write "First chunk"

sleep 5

io.write "Second chunk"

}

Other languages and runtimes will do it in a similar way. So that part is fairly straightforward. You do need to pay attention that between writing those chunks you can somehow yield control to other threads/tasks in your application (so it is really a good idea to dust off asyncio), or just use a runtime which does this for you – like Go or Crystal.

Restarting servers (deployment of new versions)

This is indeed a tricky aspect of these long-running responses. You can’t “just” quickly wait for all connections to finish and restart your server, or decomission your pod/machine/instance and replace it with another. Instead, you are going to be using a process called “draining” - you remove your machine from the load balancer so that it won’t be receiving new connections. Then you use some form of monitoring - like Puma stats - to figure out when your connection count has dropped to zero. Once it did - you can shutdown the server. In practice this means that when you want to deploy a new version of the software you will either end up with a temporary drop of capacity of 1 unit (when you wait for an instance to drain and then start a new one instead), or with a temporary overcapacity of 1 unit (if you start a new instance immediately).

This is somewhat tangential, but a lot of the runtimes we currently use are perfectly capable of “live reloading” - give or take a number of complexities that come along with it. “A Pipeline Made Of Airbags” covers it very well - basically, for a number of tasks we would be better off live-reloading our applications “in situ”, exactly because one of the benefits would be to preserve the current tasks which are servicing connections. We have given this away for the sake of immutable infrastructure, which does give advantages - but if you intend to do those streaming responses you might want to consider live reloading / hot swapping, especially if your server fleet is small.

If you want to do it, Puma - for one - allows you to do a “hot restart” which will drain the running worker processes but spawn new ones.

At WeTransfer, the way we do it is fleetwide draining - we start a draining script on the machines, wait for the connections to drain (bleed off) and then update the code on the machines, starting the new version of the code right after.

There are ways to pass a file descriptor (socket) from one UNIX process to another, effectively transferring a user connection from your old application code to the new one - but these are very esoteric and there is plenty that can go wrong in flight, haven’t heard of these being used much.

How to return errors

Basically - you can’t because the HTTP protocol doesn’t provide for it. Once you have shipped your headers you do not have the option of telling the client what exactly has gone wrong, short of closing the socket forcibly. Luckily most browsers will at least know that you have terminated your output prematurely and will alert the user accordingly. If you are using a “sized” response (which has a Content-Length header) then every HTTP client will raise an error to the user that the response hasn’t been received in full. If you are using the chunked encoding instead - the client will also know that something has gone wrong and won’t consider the response valid, but you can’t tell the client what exactly happened. You could potentially use HTTP/2 trailers for the error message but I doubt any HTTP clients support it.

What you can do is use some kind of error tracking on your server side of things (something like Appsignal or Sentry) and at least register the error there.

Resumable downloads

Tricky but doable given (strict and often unstatisfiable!) preconditions. The problem is essentially one of random access to a byte blob. And actually consists of several problems! Let’s cover them in order.

Idempotent input